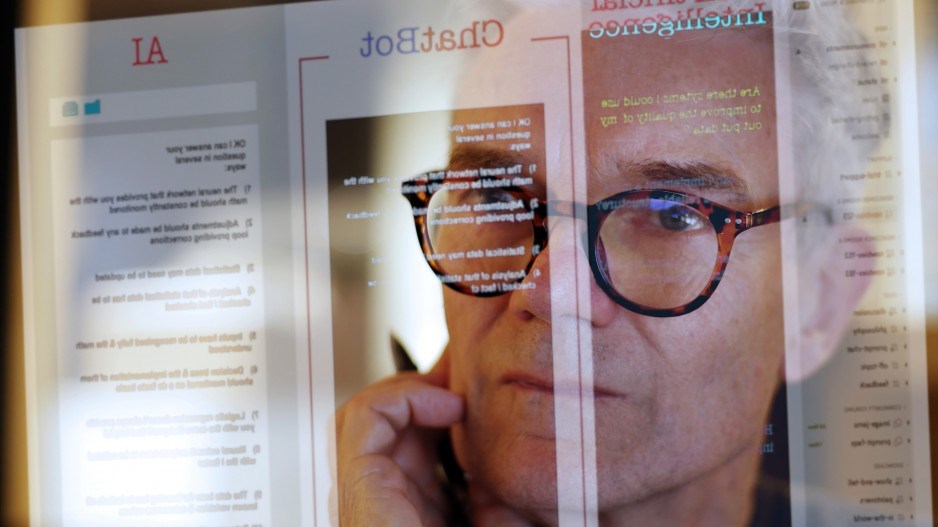

We often fear what we do not understand. If there is an emergent unease I pick up on these days in our business community – now that the pandemic isn’t so top of mind – it concerns the sudden popularization and presence of artificial intelligence and where we may be taking each other.

ChatGPT is the AI culprit in this fear because it’s a spectacularly accessible, steroidal solution to tell us what we want to know and what we wish to create. I’ve pretty much stopped using some search engines in order to use a small-fee ChatGPT instead.

Of course AI has been present for as long as I’ve been alive (don’t ask, but it’s ample). It’s just that it feels increasingly like it’s time, perhaps because of the ChatGPT publicity, to put it on the main stage. It has the same feel that we experienced with the arrival of the World Wide Web, although as Sanctuary AI CEO Geordie Rose told me on a podcast recently, this is bigger than the arrival of the steam engine.

All sorts of entities, including media, are working through their approaches on how best to capitalize on the breathtaking technology without getting the breath knocked out of us along this path. (I’ve asked ChatGPT to write a column in my voice, but thankfully it couldn’t corner the grumpiness. That being said, I’ve gotten some nice poetry out of it.)

As far as I can tell, at the apex of public concerns are three things: That machine learning will subsume the collective knowledge and agency of eight billion people (not going to happen, Rose says), that a lot of us will lose our jobs to a robot (not likely) and that how we use AI will be flubbed either by permitting it to fall into the wrong hands or to evolve without appropriate guardrails on its many risks (a little more possible).

It was redeeming, given the stage at which we find ourselves, to read a thoughtful report in recent weeks from the Canadian Institute for Advanced Research (CIFAR) that tries to meet us where we are on AI anxiety and activity.

Where we are is in “play mode” with ChatGPT but not particularly concerned about dealing with malicious use, mitigating any built-in bias in how AI functions, or ensuring greater transparency in the collection of data.

In analyzing nearly seven million posts on social media concerning AI over the last two years and search engines in the last five, the research in the Incautiously Optimistic report found about a two-to-one ratio of positive to negative discourse. My small focus group of businesspeople is a little more concerned than is the general public.

Elissa Strome, the executive director of CIFAR’s Pan-Canadian AI strategy, isn’t necessarily ringing the alarm bell quite yet, but she’s concerned that the research indicates Canadians are not sufficiently informing themselves on AI. Consider this a yellow light at the intersection.

The novel nature of ChatGPT has us a bit off course, she adds: We’re fascinated with the results without focusing on the possible biases in the ingredients that produced them. We’re proceeding without fully comprehending what we’re sharing as we do.

“It’s time to incorporate critical thinking,” she says.

To that end CIFAR has rolled out a free course I’d recommend to get the novice up to speed and to serve as a good check on the basics for anyone with some understanding of the rapidly advancing tech. Its Destination AI online tutorial (cifar.ca/ai/destinationai) takes a few hours but helped me understand the terminology, back up what Rose said was an unnecessary fear about AI taking over the world, deal with the ethical challenges of privacy, disinformation, bias and decision-making, and arguably most importantly see the big picture on its potential.

CIFAR has been a great resource in defining and advising on some of the contemporary challenges of AI. It produced a wise report in July from researchers at Simon Fraser University and University of British Columbia that identified a dozen fields in which regulation or other forms of guardrails will be advisable in the minefield of health data across provinces and territories so they can collaborate while mitigating privacy risks.

Once again, we find ourselves facing an advancement so substantial that it calls upon us not to be left behind. Schools return this week; all of us might as well get into learning mode.

Kirk LaPointe is publisher and executive editor of BIV and vice-president, editorial, of Glacier Media.